Large Language Models (LLMs) capable of complex reasoning tasks have shown promise in specialized domains like programming and creative writing. However, the world of LLMs isn’t simply a plug-and-play paradise; there are challenges in usability, safety, and computational demands. In this article, we will dive deep into the capabilities of Llama 2, while providing a detailed walkthrough for setting up this high-performing LLM via Hugging Face and T4 GPUs on Google Colab.

Developed by Meta in partnership with Microsoft, this open-source large language model aims to redefine the realms of generative AI and natural language understanding. Llama 2 isn’t just another statistical model trained on terabytes of data; it embodies a philosophy that treats an open-source approach as the backbone of AI development, particularly in the generative AI space.

Llama 2 and its dialogue-optimized variant, Llama 2-Chat, come in sizes of up to 70 billion parameters. The chat models undergo a fine-tuning process designed to align them closely with human preferences. According to Meta, this makes them both safer and more helpful than many other publicly available models. Fine-tuning at this level of detail is usually reserved for closed “product” LLMs such as ChatGPT and Bard, which are not available for public scrutiny or customization.

Technical Deep Dive of Llama 2

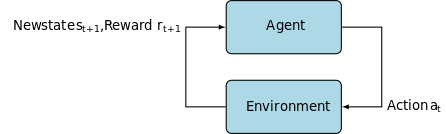

Like its predecessor, Llama 2 uses an auto-regressive transformer architecture, pretrained with self-supervised learning on a large corpus of publicly available data. The chat variants add a further layer of sophistication: Reinforcement Learning from Human Feedback (RLHF), which aligns the model more closely with human preferences. RLHF is computationally expensive, but it is central to improving the model’s safety and helpfulness.

Meta Llama 2 training architecture

Pretraining & Data Efficiency

Llama 2’s foundational improvements lie in its pretraining regime. The model builds on Llama 1 but introduces several crucial enhancements. Two stand out: 40% more training tokens (2 trillion in total) and a context length doubled to 4,096 tokens. In addition, the larger models use grouped-query attention (GQA) to improve inference scalability.

Supervised Fine-Tuning (SFT) & Reinforcement Learning with Human Feedback (RLHF)

Llama 2-Chat was fine-tuned using both SFT and Reinforcement Learning from Human Feedback (RLHF). In this pipeline, SFT is the first stage of the RLHF framework. It produces an initial model whose responses are then refined to align more closely with human preferences and expectations.

OpenAI has published an insightful illustration of the SFT and RLHF methodology used in InstructGPT. Like Llama 2, InstructGPT uses these training techniques to optimize its model’s performance.

Step 1 in the below image focuses on Supervised Fine-Tuning (SFT), while the subsequent steps complete the Reinforcement Learning from Human Feedback (RLHF) process.

InstructGPT

Supervised Fine-Tuning (SFT) is a process for optimizing a pre-trained Large Language Model (LLM) for a specific downstream task. Unsupervised methods learn from unlabeled data. SFT, by contrast, uses a dataset that has been curated and labeled in advance.

Crafting these datasets is generally costly and time-consuming, so Meta favored quality over quantity for Llama 2. Meta stopped after collecting 27,540 high-quality annotations. At that point, outputs sampled from the fine-tuned model were often competitive with responses handwritten by human annotators. This aligns with recent studies showing that even limited but clean datasets can drive high-quality outcomes.

In the SFT process, the pre-trained LLM is trained on the labeled dataset with standard supervised learning. The model’s weights are updated using gradients of a task-specific loss function. This loss function quantifies the discrepancy between the model’s predicted outputs and the ground-truth labels.
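To make the loss concrete, here is a minimal, dependency-free sketch of a token-level cross-entropy loss for SFT. It assumes the model has already produced one row of logits per target position, and the function names are hypothetical. The loss mask reflects a detail reported in the Llama 2 paper: loss on user-prompt tokens is zeroed out, so the model learns only from answer tokens.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over a single vocabulary row."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def masked_sft_loss(
    logits: List[List[float]],  # one row of vocabulary logits per position
    targets: List[int],         # ground-truth next-token id per position
    loss_mask: List[int],       # 1 = answer token (trained), 0 = prompt token (ignored)
) -> float:
    """Mean negative log-likelihood over the positions where loss_mask is 1."""
    total, count = 0.0, 0
    for row, target, keep in zip(logits, targets, loss_mask):
        if keep:
            total -= log_softmax(row)[target]
            count += 1
    return total / count if count else 0.0


# Toy example: a vocabulary of 4 tokens; the first position belongs to the prompt.
uniform = [[0.0, 0.0, 0.0, 0.0]] * 3
print(masked_sft_loss(uniform, targets=[0, 1, 2], loss_mask=[0, 1, 1]))  # log(4), about 1.386
```

A model that is uniformly unsure pays log(vocabulary size) per answer token. Confident, correct predictions drive the loss toward zero.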

This optimization allows the LLM to grasp the intricate patterns and nuances embedded within the labeled dataset. Consequently, the model is not just a generalized tool but evolves into a specialized asset, adept at performing the target task with a high degree of accuracy.

Reinforcement learning is the next step, aimed at aligning model behavior with human preferences more closely.

The tuning phase used Reinforcement Learning from Human Feedback (RLHF) with two main algorithms. The first is Rejection Sampling fine-tuning, which samples several responses per prompt and fine-tunes on the one the reward model scores highest. The second is Proximal Policy Optimization (PPO). Meta applied these iteratively across successive model versions, which improved the model and further aligned its output with human expectations.
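Rejection Sampling fine-tuning can be sketched as a best-of-K loop. For each prompt, sample K candidate responses, score them with the reward model, and keep the top-scoring one as a new fine-tuning target. In this minimal sketch, `generate` and `reward` are hypothetical stand-ins for the policy model and the reward model.

```python
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    generate: Callable[[str, int], str],  # hypothetical: (prompt, sample_index) -> response
    reward: Callable[[str, str], float],  # hypothetical: (prompt, response) -> scalar score
    k: int,
) -> List[Tuple[str, str]]:
    """Return (prompt, best_response) pairs to use as new fine-tuning data."""
    dataset = []
    for prompt in prompts:
        candidates = [generate(prompt, i) for i in range(k)]
        best = max(candidates, key=lambda response: reward(prompt, response))
        dataset.append((prompt, best))
    return dataset


# Toy stand-ins: under this made-up reward, longer answers score higher.
toy_generate = lambda prompt, i: "answer" + "!" * i
toy_reward = lambda prompt, response: float(len(response))

print(rejection_sample(["hi"], toy_generate, toy_reward, k=3))
# [('hi', 'answer!!')]
```

The paper describes applying PPO on top of the rejection-sampled checkpoint in later iterations. That step is not shown here.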

Llama 2-Chat used a binary comparison protocol to collect human preference data: annotators chose the better of two model responses to the same prompt. These comparisons trained separate reward models for helpfulness and safety. The reward models were then used to fine-tune the conversational model.
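The reward models are trained on these comparisons with a binary ranking loss. The Llama 2 paper uses the form -log(sigmoid(r(x, y_c) - r(x, y_r) - m(r))), where y_c is the chosen response and y_r the rejected one. The optional margin m(r) is larger when annotators rated the two responses as more clearly different. The sketch below computes that loss for scalar reward scores. The margin value used in the test is illustrative.

```python
import math


def ranking_loss(r_chosen: float, r_rejected: float, margin: float = 0.0) -> float:
    """Binary ranking loss: -log(sigmoid(r_chosen - r_rejected - margin))."""
    z = r_chosen - r_rejected - margin
    # -log(sigmoid(z)) == log(1 + exp(-z)); branch so exp() never overflows.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


print(round(ranking_loss(1.0, 1.0), 4))  # 0.6931: equal scores, no preference signal yet
print(round(ranking_loss(3.0, 0.0), 4))  # 0.0486: chosen response clearly ahead
```

Adding a margin makes the loss stricter. For clearly distinct pairs, the reward model must separate the two scores by at least that much before the loss becomes small.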

Ghost Attention: Multi-Turn Dialogues

Meta introduced Ghost Attention (GAtt), a technique designed to improve Llama 2’s consistency in multi-turn dialogues. It addresses a common problem: a model forgets an initial instruction, such as a persona or a formatting rule, after a few turns. GAtt acts like an anchor, linking the initial instructions to all subsequent user messages. Combined with the RLHF pipeline, it helps produce consistent, relevant, and user-aligned responses over longer dialogues.
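Below is a minimal sketch of the data side of GAtt, under simplifying assumptions. The Llama 2 paper describes three steps:

- Sample assistant replies while the instruction is attached to every user turn.
- For training, keep the instruction only in the first user turn.
- Zero the loss on tokens from earlier turns.

The function and field names are hypothetical. Real training operates on tokens rather than whole messages.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": ...}


def with_instruction_everywhere(dialogue: List[Message], instruction: str) -> List[Message]:
    """Sampling-time view: prepend the instruction to every user message."""
    return [
        {"role": m["role"],
         "content": f"{instruction} {m['content']}" if m["role"] == "user" else m["content"]}
        for m in dialogue
    ]


def gatt_training_sample(dialogue: List[Message], instruction: str) -> List[Dict[str, object]]:
    """Training-time view: instruction only on the first user turn; loss only on the final reply."""
    first_user = min(i for i, m in enumerate(dialogue) if m["role"] == "user")
    last_assistant = max(i for i, m in enumerate(dialogue) if m["role"] == "assistant")
    sample = []
    for i, m in enumerate(dialogue):
        content = f"{instruction} {m['content']}" if i == first_user else m["content"]
        sample.append({"role": m["role"], "content": content, "train": i == last_assistant})
    return sample


# Assume the assistant replies were sampled from the with_instruction_everywhere view.
dialogue = [
    {"role": "user", "content": "Hi!"},
    {"role": "assistant", "content": "Ahoy!"},
    {"role": "user", "content": "What is 2+2?"},
    {"role": "assistant", "content": "Four, matey."},
]
sample = gatt_training_sample(dialogue, "Always answer like a pirate.")
print([m["content"] for m in sample if m["role"] == "user"])
# ['Always answer like a pirate. Hi!', 'What is 2+2?']
print([m["train"] for m in sample])
# [False, False, False, True]
```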

From Meta’s Git Repository Using download.sh

- Visit the Meta Website: Navigate to Meta’s official Llama 2 site and click ‘Download The Model’

- Fill in the Details: Read through and accept the terms and conditions to proceed.

- Email Confirmation: Once the form is submitted, you’ll receive an email from Meta with a link to download the model from their git repository.

- Execute download.sh: Clone the Git repository and run the download.sh script. The script prompts you to authenticate with the URL from Meta’s email, which expires after 24 hours. You’ll also choose which model size to download: 7B, 13B, or 70B.

From Hugging Face

- Receive Acceptance Email: After gaining access from Meta, head over to Hugging Face.

- Request Access: Choose your desired model on Hugging Face and submit an access request.

- Confirmation: Expect a ‘granted access’ email within 1-2 days.

- Generate Access Tokens: Navigate to ‘Settings’ in your Hugging Face account to create access tokens.

The Transformers 4.31 release fully supports Llama 2 and opens up many tools within the Hugging Face ecosystem. These range from training and inference scripts to 4-bit quantization with bitsandbytes and Parameter-Efficient Fine-Tuning (PEFT). To get started, make sure you are on Transformers 4.31 or later and logged into your Hugging Face account.

Here’s a streamlined guide to running Llama 2 model inference in a Google Colab environment using a GPU runtime:

Google Colab Model – T4 GPU

Package Installation

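```
# accelerate is required for device_map="auto" in the pipeline below
!pip install transformers accelerate
# prompts for the access token created in your Hugging Face settings
!huggingface-cli login
```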

Import the necessary Python libraries.

```python
from transformers import AutoTokenizer
import transformers
import torch
```

Initialize the Model and Tokenizer

In this step, specify which Llama 2 model you’ll be using. For this guide, we use meta-llama/Llama-2-7b-chat-hf.

```python
model = "meta-llama/Llama-2-7b-chat-hf"
tokenizer = AutoTokenizer.from_pretrained(model)
```

Set up the Pipeline

Utilize the Hugging Face pipeline for text generation with specific settings:

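```python
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    torch_dtype=torch.float16,  # half precision so the 7B weights fit in T4 memory
    device_map="auto",          # let accelerate place the model on the available GPU
)
```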
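The `torch_dtype=torch.float16` setting is what makes the 7B model practical on a T4, which has 16 GB of memory. The sketch below is a rough back-of-the-envelope check. It assumes a nominal 7 billion parameters and counts weights only; activations, the KV cache, and framework overhead add more.

```python
def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 7e9  # nominal size of Llama-2-7b; the exact count is slightly lower
for name, nbytes in [("float32", 4), ("float16", 2), ("int8", 1), ("4-bit", 0.5)]:
    print(f"{name:>8}: ~{weight_memory_gb(N_PARAMS, nbytes):.1f} GB")
```

In float32 the weights alone (~28 GB) would not fit on a T4. In float16 (~14 GB) they fit with only a small margin. That is one reason 4-bit quantization with bitsandbytes is popular for the larger variants.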

Generate Text Sequences

Finally, run the pipeline and generate a text sequence based on your input:

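```python
sequences = pipeline(
    'Who are the key contributors to the field of artificial intelligence?\n',
    do_sample=True,                       # sample instead of greedy decoding
    top_k=10,                             # sample only from the 10 most likely tokens
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,  # stop at the end-of-sequence token
    max_length=200,                       # total length in tokens, prompt included
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```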
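The example above passes raw text to the chat model. However, Llama 2-Chat was fine-tuned on a specific prompt template, used in Meta’s reference code. The template wraps the user message in `[INST] ... [/INST]` tags, with an optional system prompt inside `<<SYS>>` markers. Below is a minimal single-turn sketch. The helper name is hypothetical. The leading `<s>` token is omitted on the assumption that the tokenizer adds it automatically.

```python
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Format a single-turn prompt in the Llama 2-Chat template (without the <s> BOS token)."""
    if system_prompt:
        # The system prompt is folded into the first user message.
        user_message = f"{B_SYS}{system_prompt.strip()}{E_SYS}{user_message.strip()}"
    return f"{B_INST} {user_message.strip()} {E_INST}"


prompt = build_llama2_chat_prompt(
    "Who are the key contributors to the field of artificial intelligence?",
    system_prompt="You are a concise assistant.",
)
print(prompt)
```

The resulting string can be passed to `pipeline(...)` in place of the raw question.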

A16Z’s UI for Llama 2

Andreessen Horowitz (A16Z) has recently launched a cutting-edge Streamlit-based chatbot interface tailored for Llama 2. Hosted on GitHub, this UI preserves session chat history and also provides the flexibility to select from multiple Llama 2 API endpoints hosted on Replicate. This user-centric design aims to simplify interactions with Llama 2, making it an ideal tool for both developers and end-users. For those interested in experiencing this, a live demo is available at Llama2.ai.

Llama2.ai

Llama 2: What makes it different from GPT Models and its predecessor Llama 1?

Variety in Scale

Many language models are offered at a single size. Llama 2, by contrast, is released in several parameter counts: 7B, 13B, and 70B. This range of configurations suits diverse computational budgets.

Enhanced Context Length

Llama 2 doubles Llama 1’s context length, from 2,048 to 4,096 tokens. This lets it take more information into account at once, improving its ability to understand and generate longer, more complex content.

Grouped Query Attention (GQA)

The architecture uses grouped-query attention (GQA), in which several query heads share a single key/value head. This shrinks the key/value cache that must be kept in memory during generation and reduces memory bandwidth, which improves inference scalability. In Llama 2, GQA is applied to the 34B and 70B variants.
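Below is a minimal, dependency-free sketch of the idea. Each group of query heads attends using one shared key/value head, so fewer K/V tensors need to be cached. The sketch handles a single decoding step, omits the learned projections, and uses hypothetical function names. The cache-size helper plugs in the published Llama 2 70B configuration: 80 layers, 64 query heads, 8 key/value heads, and head dimension 128.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def grouped_query_attention(
    queries: Matrix,       # [n_query_heads][head_dim] for the current token
    keys: List[Matrix],    # [n_kv_heads][seq_len][head_dim] cached keys
    values: List[Matrix],  # [n_kv_heads][seq_len][head_dim] cached values
) -> Matrix:
    """Scaled dot-product attention where query heads share K/V heads in groups."""
    n_q, n_kv = len(queries), len(keys)
    assert n_q % n_kv == 0, "query heads must divide evenly into K/V groups"
    group_size = n_q // n_kv
    outputs = []
    for h, q in enumerate(queries):
        kv = h // group_size  # index of the K/V head shared by this query head's group
        scale = 1.0 / math.sqrt(len(q))
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys[kv]]
        weights = softmax(scores)
        outputs.append([sum(w * v[d] for w, v in zip(weights, values[kv])) for d in range(len(q))])
    return outputs


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Keys plus values cached per token across all layers (float16 by default)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


mha = kv_cache_bytes_per_token(80, 64, 128)  # standard attention: one K/V head per query head
gqa = kv_cache_bytes_per_token(80, 8, 128)   # 8 shared K/V heads, as in Llama 2 70B
print(mha, gqa, mha // gqa)  # 2621440 327680 8
```

Sharing each K/V head across 8 query heads cuts the per-token cache by 8x. The number of query heads, and therefore the model’s attention capacity per token, stays the same.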

Performance Benchmarks

Performance Analysis of Llama 2-Chat Models with ChatGPT and Other Competitors

Llama 2 sets a strong standard among open models. According to Meta’s evaluations, it outperforms its predecessor, Llama 1, as well as other open models such as Falcon. It also comes close to GPT-3.5 on several benchmarks.

In Meta’s human evaluations, the largest Llama 2-Chat model (70B) was preferred over ChatGPT in 36% of cases and tied in another 31.5%. Source: Paper

Open Source: The Power of Community

Meta and Microsoft intend Llama 2 to be more than just a product; they envision it as a community-driven tool. Llama 2 is free to use for both research and commercial purposes. The aim is to democratize AI capabilities, making them accessible to startups, researchers, and businesses. An open approach also allows ‘crowdsourced troubleshooting’ of the model. Developers and AI ethicists can stress-test it, identify vulnerabilities, and offer solutions at an accelerated pace.

While the licensing terms for Llama 2 are generally permissive, there are exceptions. Companies with more than 700 million monthly active users, such as Google, must request a separate license from Meta. The license also prohibits using Llama 2 or its outputs to improve other large language models.

Current Challenges with Llama 2

- Data Generalization: Both Llama 2 and GPT-4 sometimes fail to perform uniformly well across very different tasks. In these scenarios, data quality and diversity matter as much as volume.

- Model Transparency: Given prior setbacks with AI producing misleading outputs, exploring the decision-making rationale behind these complex models is paramount.

Code Llama – Meta’s Latest Launch

Meta recently announced Code Llama, a large language model specialized for programming, available in 7B, 13B, and 34B parameter sizes. Similar to ChatGPT Code Interpreter, Code Llama can streamline developer workflows and make programming more accessible. It supports many programming languages and comes in specialized variants, such as Code Llama–Python for Python-specific tasks. Its different model sizes also let users trade quality against latency. Openly licensed, Code Llama invites community input for ongoing improvement.

Introducing Code Llama, an AI Tool for Coding

Conclusion

This article has walked you through setting up a Llama 2 model for text generation on Google Colab with Hugging Face. Llama 2’s performance is driven by an array of techniques, from its auto-regressive transformer architecture to Reinforcement Learning from Human Feedback (RLHF). The model has up to 70 billion parameters and features like Ghost Attention, and it outperforms other open-source chat models on many benchmarks. With its open nature, it paves the way for a new era in natural language understanding and generative AI.
