Nvidia’s (NASDAQ: NVDA) stunning artificial intelligence (AI) surge has left investors and analysts in awe. Shares of the chipmaker, which originally made its name as a manufacturer of graphics cards for personal computers (PCs), have jumped nearly sixfold since the beginning of 2023.

However, this big jump has raised concerns in certain corners of Wall Street that Nvidia stock may be in a bubble. From comparisons with the dot-com bubble of 1999, to a potential decline in AI-related demand for its chips, to its expensive valuation, there are multiple reasons why some believe that Nvidia is a bubble waiting to burst.

But a closer look at the AI market in general and Nvidia in particular will illustrate why the company is far from being in a bubble.

Why it isn’t right to call Nvidia, and AI, a bubble

A stock market bubble is a “significant run-up in stock prices without a corresponding increase in the value of the businesses they represent.” In a bubble, the valuation of a company is based on speculation instead of the actual fundamentals.

However, if you take a closer look at how AI is driving productivity gains across multiple industries, it becomes easier to see why adoption of this technology is likely to keep gaining momentum. For instance, Meta Platforms says that the integration of AI tools has led to an impressive jump of 32% in returns delivered by ad campaigns. Meanwhile, customer service associates are reportedly witnessing a 14% increase in productivity thanks to AI.

Factories, on the other hand, are expected to witness a 30% to 50% jump in productivity in the future by integrating AI, according to Bain & Company. Investment bank UBS believes that AI could drive productivity growth of 2.5% this year, ahead of the Federal Reserve’s estimate of 1.5%. Over the next three years, UBS is expecting AI to deliver 17% of productivity gains.

Nvidia’s chips are going to play a central role in driving these productivity gains across different industries. That’s because AI models, which can contain millions or even billions of parameters, need to be trained before they can be deployed in the real world. The largest of these are known as large language models (LLMs), and they are being deployed across multiple verticals from manufacturing to automotive to cloud computing.

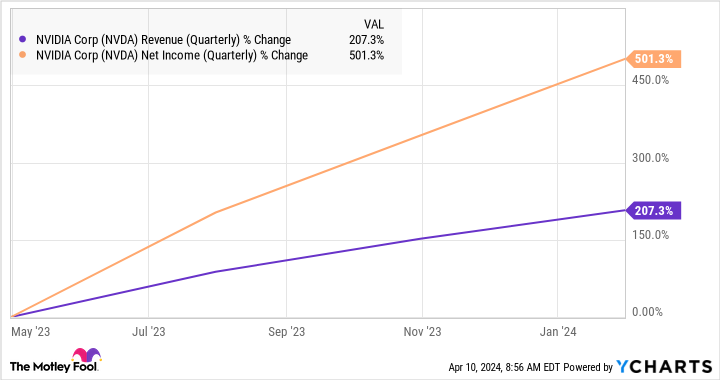

Training these LLMs requires massive computing power, which Nvidia’s GPUs provide. This explains why companies have been queuing up to get their hands on Nvidia’s flagship H100 processor, giving the chipmaker a monopoly-like position in the AI chip market with an estimated share of over 90%. The H100 sells for $25,000 to $30,000, and Nvidia reportedly makes a 1,000% profit on these cards as per Raymond James. This explains the outstanding growth in Nvidia’s revenue and earnings.

So, the sharp jump in Nvidia’s stock price is not based on speculation or euphoria, but it is backed by the eye-popping growth in the company’s revenue and earnings. The good part is that Nvidia seems capable of sustaining its outstanding growth over the long run, and the U.S. government is likely to play a key role in helping it remain the dominant force in the AI chip market.

A new grant by the U.S. government could help Nvidia maintain its AI supremacy

Nvidia’s H100 processor, based on the Hopper architecture, commands solid pricing power, and that’s not surprising given that it has been the go-to chip for customers looking to train AI models. As it turns out, the demand for this chip was so strong at one point that customers had to wait for as long as a year to get their hands on it.

Nvidia is now set to bring a new chip architecture to the market, known as Blackwell. The B200 Blackwell graphics card, which will be the successor to the H100 once it is launched later this year, is reportedly going to deliver performance gains of 7x to 30x over the H100. Nvidia also claims that it will reduce energy consumption by up to 25x.

This performance gain isn’t surprising as Blackwell is reportedly going to be manufactured using a custom 4-nanometer (nm) node from Taiwan Semiconductor Manufacturing, popularly known as TSMC. For comparison, the Hopper-based H100 was manufactured using a custom 5nm process from TSMC. By shrinking the size of the process node, TSMC has allowed Nvidia to pack 208 billion transistors into Blackwell, compared with 80 billion in the H100.

These transistors are now packed more closely together on the chip, and as a result they deliver more computing power and generate less heat, thereby reducing electricity consumption. And now, TSMC has received a $6.6 billion grant from the U.S. government, along with a $5 billion low-cost loan facility, to build a third chip plant in Arizona.

TSMC is expected to use these funds to build a 2nm chip plant. Given that Nvidia is expected to be one of the customers for TSMC’s 2nm chips, which are expected to go into mass production in 2025, it won’t be surprising to see the graphics specialist coming out with even more powerful AI graphics cards. This explains why analysts are expecting Nvidia’s data center revenue to multiply nicely in the coming years.

What’s more, Nvidia’s earnings are expected to increase at an annual rate of 35% for the next five years, as per consensus estimates. Based on the company’s fiscal 2024 earnings of $12.96 per share, its bottom line could jump to $58.11 per share after five years.

Nvidia has a five-year average forward earnings multiple of 39, which is slightly higher than its forward earnings multiple of 36. But even if Nvidia trades at a discounted 27 times forward earnings after five years (in line with the Nasdaq-100’s forward earnings multiple, as a proxy for tech stocks), its stock price could jump to $1,569. That would be an 85% increase from current levels.
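For readers who want to check the math, the projection can be reproduced with a few lines of Python. This is only a sketch of the arithmetic, using the figures cited here (fiscal 2024 earnings of $12.96 per share, 35% annual growth, five years, a multiple of 27). The function names are illustrative, and the starting share price is not stated directly; it is backed out from the 85% upside figure as an assumption.

```python
def project_eps(base_eps: float, annual_growth: float, years: int) -> float:
    """Compound per-share earnings at a constant annual growth rate."""
    return base_eps * (1 + annual_growth) ** years


def implied_price(eps: float, earnings_multiple: float) -> float:
    """Share price implied by an earnings figure and an earnings multiple."""
    return eps * earnings_multiple


def implied_start_price(target_price: float, upside: float) -> float:
    """Starting price implied by a target price and a stated upside (assumption)."""
    return target_price / (1 + upside)


# Inputs taken from the article's figures.
future_eps = project_eps(base_eps=12.96, annual_growth=0.35, years=5)
target_price = implied_price(future_eps, earnings_multiple=27)

# Not stated in the article: derived from the quoted 85% increase.
start_price = implied_start_price(target_price, upside=0.85)

print(f"EPS after five years: ${future_eps:.2f}")
print(f"Implied share price:  ${target_price:,.0f}")
print(f"Implied current price: about ${start_price:,.0f}")
```

The result is sensitive to both inputs. If earnings grew at 25% a year instead of 35%, the same multiple would imply a much lower price, so the target is best read as a scenario rather than a forecast.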

However, don’t be surprised to see this AI stock delivering stronger gains. Nvidia can outpace Wall Street’s earnings growth expectations and the market could continue rewarding it with a premium valuation as its product development moves should ideally help it remain the top player in the lucrative AI chip market.

Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister to Meta Platforms CEO Mark Zuckerberg, is a member of The Motley Fool’s board of directors. Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Meta Platforms, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool has a disclosure policy.

Nvidia Is Not in a Bubble: You Should Be Buying This Artificial Intelligence (AI) Stock Hand Over Fist Before It Soars was originally published by The Motley Fool
