In the ongoing effort to make AI more like humans, OpenAI’s GPT models have continually pushed the boundaries. GPT-4 is now able to accept prompts of both text and images.

Multimodality in generative AI denotes a model’s capability to produce varied outputs like text, images, or audio based on the input. These models, trained on specific data, learn underlying patterns to generate similar new data, enriching AI applications.

Recent Strides in Multimodal AI

A recent notable leap in this field is seen with the integration of DALL-E 3 into ChatGPT, a significant upgrade in OpenAI’s text-to-image technology. This blend allows for a smoother interaction where ChatGPT aids in crafting precise prompts for DALL-E 3, turning user ideas into vivid AI-generated art. So, while users can directly interact with DALL-E 3, having ChatGPT in the mix makes the process of creating AI art much more user-friendly.

More on DALL-E 3 and its integration with ChatGPT is available at https://openai.com/dall-e-3. This collaboration not only showcases the advancement in multimodal AI but also makes AI art creation a breeze for users.

Google’s health team, on the other hand, introduced Med-PaLM M in June this year. It is a multimodal generative model adept at encoding and interpreting diverse biomedical data. This was achieved by fine-tuning PaLM-E, a multimodal language model, for the medical domain using an open-source benchmark, MultiMedBench. The benchmark consists of over 1 million samples across 7 biomedical data types and 14 tasks, such as medical question answering and radiology report generation.

Various industries are adopting innovative multimodal AI tools to fuel business expansion, streamline operations, and elevate customer engagement. Progress in voice, video, and text AI capabilities is propelling multimodal AI’s growth.

Enterprises seek multimodal AI applications capable of overhauling business models and processes, opening growth avenues across the generative AI ecosystem, from data tools to emerging AI applications.

After GPT-4’s launch in March, some users observed a decline in its response quality over time, a concern echoed by notable developers and on OpenAI’s forums. The concern was initially dismissed by OpenAI, but a later study confirmed the issue. It reported that GPT-4’s accuracy on a prime-number identification task dropped from 97.6% to 2.4% between March and June, indicating that answer quality on some tasks declined with subsequent model updates.

ChatGPT (Blue) & Artificial intelligence (Red) Google Search Trend

The hype around OpenAI’s ChatGPT is back. It now comes with a vision feature, GPT-4V, which lets users have GPT-4 analyze images they provide. This is the newest feature to be opened up to users.

Adding image analysis to large language models (LLMs) like GPT-4 is seen by some as a big step forward in AI research and development. This kind of multimodal LLM opens up new possibilities, taking language models beyond text to offer new interfaces and solve new kinds of tasks, creating fresh experiences for users.

The training of GPT-4V was finished in 2022, with early access rolled out in March 2023. The visual feature in GPT-4V is powered by GPT-4 tech. The training process remained the same. Initially, the model was trained to predict the next word in a text using a massive dataset of both text and images from various sources including the internet.

Later, it was fine-tuned with more data, employing a method named reinforcement learning from human feedback (RLHF), to generate outputs that humans preferred.

GPT-4 Vision Mechanics

GPT-4’s vision-language capabilities are impressive, but the methods behind them have not been disclosed. One hypothesis is that they stem from the use of a more advanced large language model.

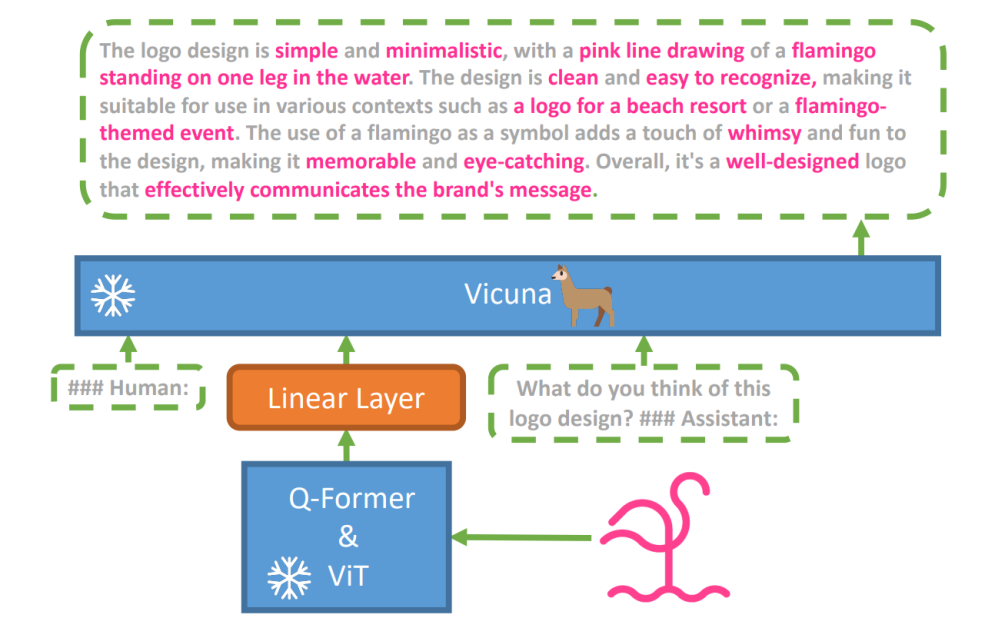

To explore this hypothesis, a new vision-language model, MiniGPT-4, was introduced, built on an advanced LLM named Vicuna. The model uses a vision encoder with pre-trained components for visual perception and aligns the encoded visual features with the Vicuna language model through a single projection layer. The architecture of MiniGPT-4 is simple yet effective, with a focus on aligning visual and language features to improve visual conversation capabilities.

MiniGPT-4’s architecture includes a vision encoder with pre-trained ViT and Q-Former, a single linear projection layer, and an advanced Vicuna large language model.
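To make the data flow concrete, here is a minimal Python sketch of that pipeline under stated assumptions. The function names, the toy dimensions, and the random stand-in for the frozen ViT and Q-Former are all hypothetical. Only the idea of a single linear projection sitting between the visual features and the language model comes from the description above. It is not MiniGPT-4’s actual code.

```python
import random

random.seed(0)

# Toy sizes chosen for readability; the real model uses much larger ones.
NUM_VISUAL_TOKENS = 4
D_VISION = 6   # width of the vision encoder output (assumed)
D_LLM = 8      # width of the language model's token embeddings (assumed)


def frozen_vision_encoder(image):
    """Hypothetical stand-in for the frozen ViT + Q-Former.

    Ignores the image and returns random visual tokens of a fixed shape.
    """
    return [
        [random.uniform(-1, 1) for _ in range(D_VISION)]
        for _ in range(NUM_VISUAL_TOKENS)
    ]


def linear_projection(visual_tokens, weight, bias):
    """Apply y = W x + b to every visual token.

    weight has shape (d_out, d_in); bias has length d_out.
    """
    return [
        [sum(x * w for x, w in zip(token, row)) + b for row, b in zip(weight, bias)]
        for token in visual_tokens
    ]


# The projection is the only trainable piece in this sketch.
weight = [[random.uniform(-0.1, 0.1) for _ in range(D_VISION)] for _ in range(D_LLM)]
bias = [0.0] * D_LLM

visual_tokens = frozen_vision_encoder(image=None)
llm_inputs = linear_projection(visual_tokens, weight, bias)

# Each visual token now has the same width as a language-model embedding.
print(len(llm_inputs), len(llm_inputs[0]))  # 4 8
```

The projected tokens would then be placed alongside the text embeddings and passed to the language model, which is outside the scope of this sketch.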

The trend of autoregressive language models in vision-language tasks has also grown, capitalizing on cross-modal transfer to share knowledge between language and multimodal domains.

MiniGPT-4 bridges the visual and language domains by aligning visual information from a pre-trained vision encoder with an advanced LLM. The model uses Vicuna as the language decoder and follows a two-stage training approach. It is first trained on a large dataset of image-text pairs to acquire vision-language knowledge, then fine-tuned on a smaller, high-quality dataset to improve the reliability and usability of its generations.

To improve the naturalness and usability of generated language in MiniGPT-4, researchers developed a two-stage alignment process, addressing the lack of adequate vision-language alignment datasets. They curated a specialized dataset for this purpose.

Initially, the model generated detailed descriptions of input images, with a conversational prompt in the Vicuna language model’s format used to draw out more detail. This stage aimed at generating more comprehensive image descriptions.

Initial Image Description Prompt:

###Human: <Img><ImageFeature></Img>Describe this image in detail. Give as many details as possible. Say everything you see. ###Assistant:

For data post-processing, any inconsistencies or errors in the generated descriptions were corrected using ChatGPT, followed by manual verification to ensure high quality.

Second-Stage Fine-tuning Prompt:

###Human: <Img><ImageFeature></Img><Instruction>###Assistant:
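Both templates share the same shape, which can be sketched as a small Python helper. The helper name and the example instruction are hypothetical. The sketch only assembles the text. In the real model, the `<ImageFeature>` slot is understood to stand for the projected visual features rather than literal text.

```python
IMAGE_SLOT = "<Img><ImageFeature></Img>"

STAGE_ONE_INSTRUCTION = (
    "Describe this image in detail. Give as many details as possible. "
    "Say everything you see."
)


def build_prompt(instruction: str) -> str:
    """Fill the shared template: human turn, image slot, instruction, assistant cue."""
    return f"###Human: {IMAGE_SLOT}{instruction}###Assistant:"


# The first-stage prompt keeps a space before the assistant cue, as printed above.
stage_one_prompt = build_prompt(STAGE_ONE_INSTRUCTION + " ")

# A second-stage prompt with a made-up instruction, for illustration only.
stage_two_prompt = build_prompt("What is unusual about this picture?")

print(stage_one_prompt)
print(stage_two_prompt)
```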

This exploration opens a window into understanding the mechanics of multimodal generative AI like GPT-4, shedding light on how vision and language modalities can be effectively integrated to generate coherent and contextually rich outputs.

Exploring GPT-4 Vision

Determining Image Origins with ChatGPT

GPT-4 Vision enhances ChatGPT’s ability to analyze images and pinpoint their geographical origins. This feature transitions user interactions from just text to a mix of text and visuals, becoming a handy tool for those curious about different places through image data.

Asking ChatGPT where a Landmark Image is taken

Complex Math Concepts

GPT-4 Vision excels at working through complex mathematical ideas by analyzing graphical or handwritten expressions. This feature is a useful tool for individuals looking to solve intricate mathematical problems, making GPT-4 Vision a notable aid in educational and academic fields.

Asking ChatGPT to understand a complex math concept

Converting Handwritten Input to LaTeX Code

One of GPT-4V’s remarkable abilities is translating handwritten input into LaTeX code. This feature is a boon for researchers, academics, and students who often need to convert handwritten mathematical expressions or other technical information into a digital format. Converting handwriting to LaTeX broadens what can be digitized and simplifies the technical writing process.

GPT-4V’s ability to convert handwritten input into LaTeX code
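The examples in this article use the ChatGPT interface. For readers who would rather script the same kind of request, the sketch below builds a payload that pairs a text question with an image. It assumes the image-input message format of OpenAI’s Chat Completions API; the model name, the token limit, and the placeholder image bytes are assumptions, and the payload is only constructed here, not sent.

```python
import base64
import json


def build_vision_request(image_bytes: bytes, question: str,
                         model: str = "gpt-4-vision-preview") -> dict:
    """Build a chat request that pairs a text question with one PNG image.

    The model name is an assumed placeholder; use whichever vision-capable
    model your account can access.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 300,  # arbitrary cap for the reply
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Placeholder bytes, not a real image; read a PNG file from disk in practice.
request = build_vision_request(
    b"placeholder-image-bytes",
    "Convert the handwritten equation in this image to LaTeX.",
)
print(json.dumps(request, indent=2)[:200])
```

Embedding the image as a base64 data URL keeps the request self-contained; a public image URL in the same field is the usual alternative.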

Extracting Table Details

GPT-4V showcases skill in extracting details from tables and addressing related inquiries, a vital asset in data analysis. Users can utilize GPT-4V to sift through tables, gather key insights, and resolve data-driven questions, making it a robust tool for data analysts and other professionals.

GPT-4V deciphering table details and responding to related queries

Comprehending Visual Pointing

The unique ability of GPT-4V to comprehend visual pointing adds a new dimension to user interaction. By understanding visual cues, GPT-4V can respond to queries with a higher contextual understanding.

GPT-4V showcases the distinct ability to comprehend visual pointing

Building Simple Mock-Up Websites using a drawing

Motivated by this tweet, I attempted to create a mock-up for the unite.ai website.

While the outcome didn’t quite match my initial vision, here’s the result I achieved.

ChatGPT Vision based output HTML Frontend

Limitations & Flaws of GPT-4V(ision)

To analyze GPT-4V, the OpenAI team carried out qualitative and quantitative assessments. Qualitative ones included internal tests and external expert reviews, while quantitative ones measured model refusals and accuracy in various scenarios such as identifying harmful content, demographic recognition, privacy concerns, geolocation, cybersecurity, and multimodal jailbreaks.
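As a rough illustration of what measuring refusals and accuracy can look like, here is a small Python sketch. The record fields, the metric definitions, and the sample records are all made up for this example. They are not OpenAI’s evaluation code or results.

```python
from dataclasses import dataclass


@dataclass
class EvalRecord:
    scenario: str        # e.g. "harmful_content" or "geolocation"
    should_refuse: bool  # whether a refusal is the desired behavior
    refused: bool        # whether the model actually refused
    correct: bool        # whether an answered prompt was answered correctly


def refusal_rate(records):
    """Share of prompts that should be refused and actually were."""
    targets = [r for r in records if r.should_refuse]
    if not targets:
        return None
    return sum(r.refused for r in targets) / len(targets)


def answer_accuracy(records):
    """Share of correct answers among benign prompts the model answered."""
    answered = [r for r in records if not r.should_refuse and not r.refused]
    if not answered:
        return None
    return sum(r.correct for r in answered) / len(answered)


# Made-up placeholder records, purely to exercise the two functions.
records = [
    EvalRecord("harmful_content", should_refuse=True, refused=True, correct=False),
    EvalRecord("harmful_content", should_refuse=True, refused=False, correct=False),
    EvalRecord("geolocation", should_refuse=False, refused=False, correct=True),
    EvalRecord("geolocation", should_refuse=False, refused=False, correct=False),
    EvalRecord("geolocation", should_refuse=False, refused=True, correct=False),
]

print(refusal_rate(records), answer_accuracy(records))  # 0.5 0.5
```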

Still, the model is not perfect.

OpenAI’s GPT-4V system card highlights limitations of the model, such as incorrect inferences and missed text or characters in images. It may hallucinate or invent facts. In particular, it is not suited to identifying dangerous substances in images and often misidentifies them.

In medical imaging, GPT-4V can provide inconsistent responses and lacks awareness of standard practices, leading to potential misdiagnoses.

Unreliable performance for medical purposes (Source)

It also fails to grasp the nuances of certain hate symbols and may generate inappropriate content based on the visual inputs. OpenAI advises against using GPT-4V for critical interpretations, especially in medical or sensitive contexts.

The arrival of GPT-4 Vision (GPT-4V) brings along a bunch of cool possibilities and new hurdles to jump over. Before the rollout, a lot of effort went into making sure risks, especially when it comes to pictures of people, were well looked into and reduced. It’s impressive to see how GPT-4V has stepped up, showing a lot of promise in tricky areas like medicine and science.

Now, there are some big questions on the table. For instance, should these models be able to identify famous folks from photos? Should they guess a person’s gender, race, or feelings from a picture? And, should there be special tweaks to help visually impaired individuals? These questions open up a can of worms about privacy, fairness, and how AI should fit into our lives, which is something everyone should have a say in.
