Imagine looking for similar things based on deeper insights instead of just keywords. That’s what vector databases and similarity searches help with. Vector databases enable vector similarity search. It uses the distance between vectors to find data points in search queries.

However, similarity search in high-dimensional data can be slow and resource-intensive. Enter Quantization techniques! They play an important role in optimizing data storage and accelerating data retrieval in vector databases.

This article explores various quantization techniques, their types, and real-world use cases.

What is Quantization and How Does it Work?

Quantization is the process of converting continuous data into discrete data points. Especially when you’re dealing with billion-scale parameters, quantization is essential for managing and processing. In vector databases, quantization transforms high-dimensional data into compressed space while preserving important features and vector distances.

Quantization significantly reduces memory bottlenecks and improves storage efficiency.

The process of quantization includes three key processes:

1. Compressing High-Dimensional Vectors

In quantization, we use techniques like codebook generation, feature engineering, and encoding. These techniques compress high-dimensional vector embeddings into a low-dimensional subspace. In other words, the vector is split into numerous subvectors. Vector embeddings are numerical representations of audio, images, videos, text, or signal data, enabling easier processing.

2. Mapping to Discrete Values

This step involves mapping the low-dimensional subvectors to discrete values. The mapping further reduces the number of bits of each subvector.

3. Compressed Vector Storage

Finally, the mapped discrete values of the subvectors are placed in the database for the original vector. Compressed data representing the same information in fewer bits optimizes its storage.

Benefits of Quantization for Vector Databases

Quantization offers a range of benefits, resulting in improved computation and reduced memory footprint.

1. Efficient Scalable Vector Search

Quantization optimizes the vector search by reducing the comparison computation cost. Therefore, vector search requires fewer resources, improving its overall efficiency.

2. Memory Optimization

Quantized vectors allows you to store more data within the same space. Furthermore, data indexing and search are also optimized.

3. Speed

With efficient storage and retrieval comes faster computation. Reduced dimensions allow faster processing, including data manipulation, querying, and predictions.

Some popular vector databases like Qdrant, Pinecone, and Milvus offer various quantization techniques with different use cases.

Use Cases

The ability of quantization to reduce data size while preserving significant information makes it a helpful asset.

Let’s dive deeper into a few of its applications.

1. Image and Video processing

Images and video data have a broader range of parameters, significantly increasing computational complexity and memory footprint. Quantization compresses the data without losing important details, enabling efficient storage and processing. This speeds searches for images and videos.

2. Machine Learning Model Compression

Training AI models on large data sets is an intensive task. Quantization helps by reducing model size and complexity without compromising its efficiency.

3. Signal Processing

Signal data represents continuous data points like GPS or surveillance footage. Quantization maps data into discrete values, allowing faster storage and analysis. Furthermore, efficient storage and analysis speed up search operations, enabling faster signal comparison.

Different Quantization Techniques

While quantization allows seamless handling of billion-scale parameters, it risks irreversible information loss. However, finding the right balance between acceptable information loss and compression improves efficiency.

Each quantization technique comes with pros and cons. Before you choose, you should understand compression requirements, as well as the strengths and limitations of each technique.

1. Binary Quantization

Binary quantization is a method that converts all vector embeddings into 0 or 1. If a value is greater than 0, it is mapped to 1, otherwise it is marked as 0. Therefore, it converts high-dimensional data into significantly lower-dimensional allowing faster similarity search.

Formula

The Formula is:

Binary quantization formula. Image by author.

Here’s an example of how binary quantization works on a vector.

Graphical representation of binary quantization. Image by author.

Strengths

- Fastest search, surpassing both scalar and product quantization techniques.

- Reduces memory footprint by a factor of 32.

Limitations

- Higher ratio of information loss.

- Vector components require a mean approximately equal to zero.

- Poor performance on low-dimensional data due to higher information loss.

- Rescoring is required for the best results.

Vector databases like Qdrant and Weaviate offer binary quantization.

2. Scalar Quantization

Scalar quantization converts floating point or decimal numbers into integers. This starts with identifying a minimum and maximum value for each dimension. The identified range is then divided into several bins. Lastly, each value in each dimension is assigned to a bin.

The level of precision or detail in quantized vectors depends upon the number of bins. More bins result in higher accuracy by capturing finer details. Therefore, the accuracy of vector search also depends upon the number of bins.

Formula

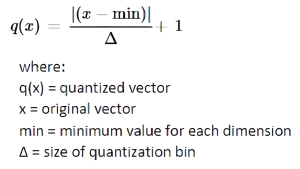

The formula is:

Scalar quantization formula. Image by author.

Here’s an example of how scalar quantization works on a vector.

Graphical representation of scalar quantization. Image by author.

Strengths

- Significant memory optimization.

- Small information loss.

- Partially reversible process.

- Fast compression.

- Efficient scalable search due to small information loss.

Limitations

- A slight decrease in search quality.

- Low-dimensional vectors are more susceptible to information loss as each data point carries important information.

Vector databases such as Qdrant and Milvus offer scalar quantization.

3. Product Quantization

Product quantization divides the vectors into subvectors. For each section, the center points, or centroids, are calculated using clustering algorithms. Their closest centroids then represent every subvector.

Similarity search in product quantization works by dividing the search vector into the same number of subvectors. Then, a list of similar results is created in ascending order of distance from each subvector’s centroid to each query subvector. Since the vector search process compares the distance from query subvectors to the centroids of the quantized vector, the search results are less accurate. However, product quantization speeds up the similarity search process and higher accuracy can be achieved by increasing the number of subvectors.

Formula

Finding centroids is an iterative process. It uses the recalculation of Euclidean distance between each data point to its centroid until convergence. The formula of Euclidean distance in n-dimensional space is:

Product quantization formula. Image by author.

Here’s an example of how product quantization works on a vector.

Graphical representation of product quantization. Image by author.

Strengths

- Highest compression ratio.

- Better storage efficiency than other techniques.

Limitations

- Not suitable for low-dimensional vectors.

- Resource-intensive compression.

Vector databases like Qdrant and Weaviate offer product quantization.

Choosing the Right Quantization Method

Each quantization method has its pros and cons. Choosing the right method depends upon factors which include but are not limited to:

- Data dimension

- Compression-accuracy tradeoff

- Efficiency requirements

- Resource constraints.

Consider the comparison chart below to understand better which quantization technique suits your use case. This chart highlights accuracy, speed, and compression factors for each quantization method.

Image by Qdrant

From storage optimization to faster search, quantization mitigates the challenges of storing billion-scale parameters. However, understanding requirements and tradeoffs beforehand is crucial for successful implementation.

For more information on the latest trends and technology, visit Unite AI.

Credit: Source link